The Fractal Universe, Part 2

Given the recent discussion in comments on this blog I thought I’d give a brief update on the issue of the scale of cosmic homogeneity; I’m going to repeat some of the things I said in a post earlier this week just to make sure that this discussion is reasonably self-contained.

Our standard cosmological model is based on the Cosmological Principle, which asserts that the Universe is, in a broad-brush sense, homogeneous (is the same in every place) and isotropic (looks the same in all directions). But the question that has troubled cosmologists for many years is what is meant by large scales? How broad does the broad brush have to be? A couple of presentations discussed the possibly worrying evidence for the presence of a local void, a large underdensity on a scale of about 200 Mpc which may influence our interpretation of cosmological results.

I blogged some time ago about the idea that the Universe might have structure on all scales, as would be the case if it were described in terms of a fractal set characterized by a fractal dimension $D$. In a fractal set, the mean number of neighbours of a given galaxy within a spherical volume of radius $R$ is proportional to $R^D$. If galaxies are distributed uniformly (homogeneously) then $D=3$, as the number of neighbours simply depends on the volume of the sphere, i.e. as $R^3$, and the average number-density of galaxies. A value of $D<3$ indicates that the galaxies do not fill space in a homogeneous fashion: $D=1$, for example, would indicate that galaxies were distributed in roughly linear structures (filaments); the mass of material distributed along a filament enclosed within a sphere grows linearly with the radius of the sphere, i.e. as $R^1$, not as its volume; galaxies distributed in sheets would have $D=2$, and so on.
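To make the scaling of neighbour counts with radius concrete, here is a minimal sketch in Python. It is a toy illustration, not the analysis used in any of the papers mentioned here: it scatters points uniformly in a unit cube, on a flat sheet and along a straight filament, counts the mean number of neighbours within radius $R$ of a set of centres, and fits the slope of $\log N$ against $\log R$ as an estimate of $D$. The function names, the number of points, the range of radii and the edge margin are all assumptions chosen for the example.

```python
import numpy as np

def mean_neighbours(points, centres, radii):
    """Mean number of points within each radius of the chosen centres."""
    # Distances between every centre and every point, shape (n_centres, n_points)
    d = np.linalg.norm(centres[:, None, :] - points[None, :, :], axis=-1)
    # Subtract 1 so that a centre does not count itself as a neighbour
    return np.array([(d < r).sum(axis=1).mean() - 1.0 for r in radii])

def estimate_dimension(points, radii, margin, max_centres=300):
    """Least-squares slope of log N(<R) against log R."""
    # Only use centres at least `margin` from the faces of the unit cube,
    # so that spheres of radius <= margin never poke outside the sample
    inner = np.all((points > margin) & (points < 1.0 - margin), axis=1)
    centres = points[inner][:max_centres]
    counts = mean_neighbours(points, centres, radii)
    slope, _ = np.polyfit(np.log(radii), np.log(counts), 1)
    return slope

rng = np.random.default_rng(0)
n = 3000
radii = np.logspace(-1.3, -0.7, 8)  # roughly 0.05 to 0.2 in box units
margin = 0.2

uniform = rng.random((n, 3))                                   # fills space
sheet = np.column_stack([rng.random((n, 2)), np.full(n, 0.5)])  # flat sheet
filament = np.column_stack(
    [rng.random(n), np.full(n, 0.5), np.full(n, 0.5)]           # straight line
)

d_uniform = estimate_dimension(uniform, radii, margin)
d_sheet = estimate_dimension(sheet, radii, margin)
d_filament = estimate_dimension(filament, radii, margin)

print(f"uniform:  D ~ {d_uniform:.2f}")
print(f"sheet:    D ~ {d_sheet:.2f}")
print(f"filament: D ~ {d_filament:.2f}")
```

The fitted slopes should come out close to 3, 2 and 1 respectively. A real survey is much harder to handle than this, because of selection effects and awkward boundaries, which is part of why measuring $D$ from data is tricky.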

We know that $D<3$ on small scales (in cosmological terms, still several Megaparsecs), but the evidence for a turnover to $D=3$ has not been so strong, at least not until recently. It’s not just that measuring $D$ from a survey is actually rather tricky, but also that when we cosmologists adopt the Cosmological Principle we apply it not to the distribution of galaxies in space, but to space itself. We assume that space is homogeneous so that its geometry can be described by the Friedmann-Lemaitre-Robertson-Walker metric.

According to Einstein’s theory of general relativity, clumps in the matter distribution would cause distortions in the metric which are roughly related to fluctuations in the Newtonian gravitational potential $\delta\Phi$ by $\delta\Phi/c^2 \sim \left(\lambda/ct\right)^2 \left(\delta\rho/\rho\right)$, give or take a factor of a few, so that a large fluctuation in the density of matter wouldn’t necessarily cause a large fluctuation of the metric unless it were on a scale $\lambda$ reasonably large relative to the cosmological horizon $\sim ct$. Galaxies correspond to a large $\delta\rho/\rho$ but don’t violate the Cosmological Principle because they are too small in scale $\lambda$ to perturb the background metric significantly.
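As a rough numerical illustration of that argument, the sketch below evaluates the order-of-magnitude relation $\delta\Phi/c^2 \sim (\lambda/ct)^2\,(\delta\rho/\rho)$ for two cases. All the input numbers are assumptions picked for illustration: a horizon scale $ct$ of about 4000 Mpc, a galaxy taken to be about 0.03 Mpc across with a density contrast of about a million, and a 200 Mpc void with a density contrast of about $-0.3$. The function name is hypothetical, and the relation itself is only good to a factor of a few.

```python
def metric_perturbation(scale_mpc, density_contrast, horizon_mpc=4000.0):
    """Order-of-magnitude estimate of |delta_Phi| / c^2.

    scale_mpc        -- size of the fluctuation, lambda, in Mpc
    density_contrast -- delta_rho / rho (dimensionless, may be negative)
    horizon_mpc      -- assumed horizon scale c*t in Mpc (illustrative value)
    """
    return (scale_mpc / horizon_mpc) ** 2 * abs(density_contrast)

# A galaxy: tiny compared with the horizon, but enormously overdense
galaxy = metric_perturbation(scale_mpc=0.03, density_contrast=1e6)

# A large local void: big, but only mildly underdense
void = metric_perturbation(scale_mpc=200.0, density_contrast=-0.3)

print(f"galaxy: delta_Phi/c^2 ~ {galaxy:.1e}")
print(f"void:   delta_Phi/c^2 ~ {void:.1e}")
```

With these assumed inputs both estimates are far smaller than unity, which is the sense in which neither a galaxy nor a void of this kind would seriously distort the background metric.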

In my previous post I left the story as it stood about 15 years ago, and there have been numerous developments since then, some convincing (to me) and some not. Here I’ll just give a couple of key results, which I think to be important because they address a specific quantifiable question rather than relying on qualitative and subjective interpretations.

The first, which is from a paper I wrote with my (then) PhD student Jun Pan, provided what I think is the first convincing demonstration that the correlation dimension of galaxies in the IRAS PSCz survey does turn over to the homogeneous value on large scales:

You can see quite clearly that there is a gradual transition to homogeneity beyond about 10 Mpc, and this transition is certainly complete before 100 Mpc. The PSCz survey comprises “only” about 11,000 galaxies, and it is relatively shallow too (with a depth of about 150 Mpc), but has an enormous advantage in that it covers virtually the whole sky. This is important because it means that the survey geometry does not have a significant effect on the results. Our method of analysis is also important because it does not assume homogeneity at the start. In a traditional correlation function analysis the number of pairs of galaxies with a given separation is compared with a random distribution with the same mean number of galaxies per unit volume. The mean density, however, has to be estimated from the same survey from which the correlation function is being calculated, and if there is large-scale clustering beyond the size of the survey this estimate will not be a fair estimate of the global value. Such analyses therefore assume what they set out to prove. Ours does not beg the question in this way.

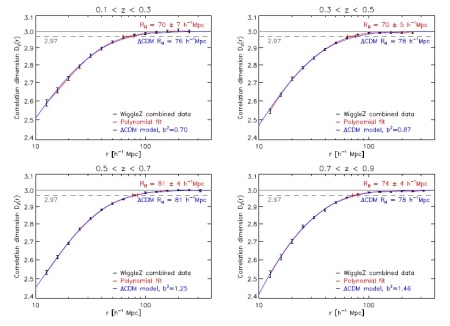

The PSCz survey is relatively sparse but more recently much bigger surveys involving optically selected galaxies have confirmed this idea with great precision. A particularly important recent result came from the WiggleZ survey (in a paper by Scrimgeour et al. 2012). This survey is big enough to look at the correlation dimension not just locally (as we did with PSCz) but as a function of redshift, so we can see how it evolves. In fact the survey contains about 200,000 galaxies in a volume of about a cubic Gigaparsec. Here are the crucial graphs:

I think this proves beyond any reasonable doubt that there is a transition to homogeneity at about 80 Mpc, well within the survey volume. My conclusion from this and other studies is that the structure is roughly self-similar on small scales, but this scaling gradually dissolves into homogeneity. In a Fractal Universe the correlation dimension would not depend on scale, so what I’m saying is that we do not live in a fractal Universe. End of story.


June 27, 2014 at 4:26 pm

A second opinion is that nature’s hierarchy appears to top out at about the point that our observational capabilities approach their limits. Were our observational capabilities to change to allow observation of larger scales by a factor of, say, 10 to 1000 or more, then our conclusions regarding the extent of the hierarchy might be quite different. In a discrete fractal cosmos, and there are various fractal models with QUITE DIFFERENT properties from the usual assumption of continuous fractal modeling, the current observations could not be used to rule out a fractal cosmos.

A scientist does not say that the cosmos is not fractal, but rather takes the more scientific stand that the cosmos might be fractal but that within the limits of the anthropocentric observable universe nature’s hierarchy appears to have a turnover to a uniform distribution of matter on scales of about 80 Mpc, according to current but evolving estimates.

June 27, 2014 at 5:38 pm

The turnover to homogeneity happens well within current survey volumes.

I’m not sure I understand your later comment, which seems to say that if it’s shown not to be a fractal then it can still be a fractal if you define a fractal to be something other than a fractal.

June 27, 2014 at 6:58 pm

Peter, without taking sides over cosmological fractals, there are ‘free’ parameters in fractal models, ie values not specified by the model, which are to be estimated from the data.

June 27, 2014 at 9:48 pm

“Trivially, the universe could be fractal on scales much larger than the observable universe” — That does not make sense. By its very definition, if you take the definition seriously, a fractal applies at ALL scales. That is why we know the universe is not fractal.

June 28, 2014 at 12:20 am

Yes, that is why I asked Phillip on a previous thread what he meant by “fractal on large scales”. He answered: “a Charlier-style universe which is self-similar at all scales”, which probably means more to you than to me.

June 28, 2014 at 5:45 am

Why ask Mr. Helbig anything? He is a font of disinformation and unscientific absolute certainty. The Rush Limbaugh of cosmology.

GFR Ellis has apparently not considered discrete fractals, which are NOT self-similar at ALL scales, but only at discrete intervals of scale.

Should I repeat that for the hard of hearing? There is such a thing as a discrete fractal and examples are given in Mandelbrot’s seminal book.

Telescoper also seems to completely ignore my oft-repeated pleas for people to stop thinking that a fractal must be self-similar at all scales. Over and over again I have said, and Mandelbrot has demonstrated that the absence of obvious fractal structure (aside from the entire cosmic web which is highly fractal) does not mean the end of nature’s hierarchy, or that strong inhomogeneities cannot emerge again at a higher scale.

This is simple logic and natural philosophy and observations of the actual physical world ( where galaxies are NOT “point sources” [ shades of spherical cows]!)

Sigh, and sigh.

June 28, 2014 at 5:50 am

The definition of a fractal that I am familiar with is one that is self-similar on all scales. If you choose another definition, I suppose that’s up to you, but it doesn’t help. The hierarchical structure of the Universe is broken on small scales and large scales so it is not a fractal in the usual sense.

June 28, 2014 at 12:08 pm

There is a great deal of difference between a situation in which somebody proposes a model having ‘floating’ parameters ie parameters whose (fixed) values are to be estimated from the data (these estimates may of course be revised as technology reduces noise in data), and somebody whose proposed model is found to be inconsistent with data and who then ‘floats’ a parameter of that model – a parameter whose value had previously been asserted. The latter methodology is not invariably wrong, but flotation of parameters is a warning flag that the path to epicycles is being taken. As I understand it, both RLO and mainstreamers are accusing each other of this.

June 28, 2014 at 2:21 pm

Anton,

If you’re referring to the transition scale as a parameter, that’s not quite correct. It’s a derived quantity that is fixed when other parameters are known with sufficient accuracy. Now we have enough information we can predict various measures of the clustering pattern as a function of scale. In this case it matches well, though there remain question-marks over other measures.

Peter

June 28, 2014 at 5:23 pm

Peter, I wasn’t being remotely that specific!

June 29, 2014 at 5:10 am

In Mandelbrot’s book one of the archetypal fractals cited is the Koch curve. There is a discrete scale factor of 3 (or 1/3 depending on how you look at it) in the construction. So the building blocks of the Koch curve form a discrete self-similar hierarchy whose size differs by a factor of 3 relative to a neighboring level.

Of more relevance to cosmology is the intriguing toy model described by Fournier d’Albe (1907?) and discussed in Mandelbrot’s book The Fractal Geometry of Nature. The point F d’A was making was not to say the cosmos looked like his toy model, but rather to use the toy model to illustrate the interesting properties of discrete self-similar hierarchies.

So discrete self-similar hierarchical models come within the general definition of fractals, at least to me they do. If one insists that fractals must be self-similar on all scales, fine. Then forget the “fractal” characterization and call it an unbounded discrete self-similar paradigm, if you like. The hierarchy has the same properties regardless of the artificial semantic conditions one tries to impose upon it.

Interesting note: E.R. Harrison once told me that hierarchical cosmologies were a non-starter because they had a center of the Universe, and so violated the Copernican Principle. I tried to point out that the most natural hierarchical models, and those partially observed in nature, most certainly do not have such a center. I was shocked by his attitude toward hierarchical models. I have his unfortunate statement and related correspondence in print.

Another interesting note is that GFR Ellis told me a few years back that self-similarity probably did not play an important role in astrophysics or cosmology. Hmmmm, perhaps we live in different universes. I also have this in writing.

The bottom line is that Kuhn had it exactly right. Humans feel the need to hold on to their fundamental paradigms for dear life and to strongly resist any attempt to complicate things or consider fundamentally different assumptions. The idea of making a partial strategic retreat and then recreating a radically different paradigm based on a more secure empirical foundation fills them with insecurity and angst. They have spent decades studying the old paradigm; their status and daily bread come from not rocking the boat. Thankfully observers and experimentalists have different motivations.

June 29, 2014 at 6:10 am

People consider different assumptions all the time, and then reject them when they’re excluded by observational data. It’s called science. You should try it sometime.

June 29, 2014 at 4:39 pm

Thomas Kuhn was a fine historian of science, but a lousy philosopher of science when he sought to draw abstract principles from his observations in science’s history. It is true, for instance, that we have a totally different understanding of the electron in quantum mechanics than in late-19th century classical mechanics. But it is false that quantum mechanics replaced classical mechanics as arbitrarily as a change in clothing fashion, as Kuhn’s work suggests (although he wasn’t that explicit, because then his error would have been obvious. That was left to wild men like Feyerabend). QM enabled us to answer questions that classical didn’t and couldn’t, whereas the converse was not true. Our model might change underfoot radically, but our ability to predict improves continually.

What went wrong? Kuhn followed Popper (much as they disagreed) in rejecting inductive logic, yet inductive logic done correctly IS probability theory done correctly. Newtonian vs Einsteinian mechanics, for instance, is simply an Olympian case of comparative hypothesis testing using experimental data. Those data contain noise, of course, hence the relevance of probability theory. You say that the probability of Einstein in the light of the data is 0.999999 and the probability of Newton is 0.000001, and decide that Einstein is right. But if you reject induction, as Kuhn did, then you have no way of ranking theories/paradigms, and you say that they come and go arbitrarily. Nonsense!

June 30, 2014 at 4:28 pm

1. False.

2. Unscientifically *absolute* once again.

What an ignorant blowhard!

June 30, 2014 at 4:50 pm

I’m getting bored with this now. I’m closing comments on this piece.

June 30, 2014 at 5:21 am

Apparently there is a new book on the Faraday/Maxwell collaboration on EM.

https://www.sciencenews.org/article/%E2%80%98faraday-maxwell-and-electromagnetic-field%E2%80%99-biography-brilliance

The really nice thing about this chapter in the history of science is the separation of the conceptual/natural philosophy input from the analytical/ mathematics input since they were largely contributed by two different people with very different talents.

Usually these two inputs are intertwined within one person or within a collection of individuals, and it’s much harder to differentiate them.

Thanks for the thoughtful discussions. Time to focus on the wonders of World Cup 2014.