Arrows and Demons

My recent post about randomness and non-randomness spawned a lot of comments over on Cosmic Variance about the nature of entropy. I thought I’d add a bit about that topic here, mainly because I don’t really agree with most of what is written in textbooks on this subject.

The connection between thermodynamics (which deals with macroscopic quantities) and statistical mechanics (which explains these in terms of microscopic behaviour) is a fascinating but troublesome area. James Clerk Maxwell did much to establish the microscopic meaning of the first law of thermodynamics, but he never tried to develop the second law from the same standpoint. Those who did were faced with a conundrum.

The behaviour of a system of interacting particles, such as the particles of a gas, can be expressed in terms of a Hamiltonian H which is constructed from the positions and momenta of its constituent particles. The resulting equations of motion are quite complicated because every particle, in principle, interacts with all the others. They do, however, possess a simple yet important property. Everything is reversible, in the sense that the equations of motion remain the same if one changes the direction of time and changes the direction of motion for all the particles. Consequently, one cannot tell whether a movie of atomic motions is being played forwards or backwards.
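To make the reversibility claim concrete, here is a minimal sketch under my own assumptions (a toy, not a model of a real gas): a one-dimensional chain of unit masses joined by identical springs, with Hamiltonian H = Σ p²/2 + Σ k(x_{i+1} - x_i)²/2 and fixed walls at both ends. It is integrated with the velocity Verlet scheme, chosen because, like the exact equations of motion, it is time-reversible. Run it forwards, flip every momentum, run it forwards again for the same number of steps, and it retraces its path to the starting point, up to floating-point round-off. The function names are hypothetical.

```python
"""Toy check of time-reversibility for a Hamiltonian system (illustrative sketch)."""
from typing import List, Tuple

Vector = List[float]


def forces(x: Vector, k: float = 1.0) -> Vector:
    """Force on each unit mass in a spring chain with fixed walls at both ends.

    Derived from H = sum(p**2)/2 + sum(k * (x[i+1] - x[i])**2)/2 with x = 0 at the walls.
    """
    padded = [0.0] + list(x) + [0.0]
    return [k * (padded[i] - 2.0 * padded[i + 1] + padded[i + 2]) for i in range(len(x))]


def verlet(x: Vector, p: Vector, dt: float, steps: int) -> Tuple[Vector, Vector]:
    """Advance (x, p) with the velocity Verlet scheme, which is time-reversible."""
    x, p = list(x), list(p)
    f = forces(x)
    for _ in range(steps):
        p = [pi + 0.5 * dt * fi for pi, fi in zip(p, f)]   # half kick
        x = [xi + dt * pi for xi, pi in zip(x, p)]          # drift
        f = forces(x)
        p = [pi + 0.5 * dt * fi for pi, fi in zip(p, f)]   # half kick
    return x, p


def reversal_error(x0: Vector, p0: Vector, dt: float = 0.01, steps: int = 5000) -> float:
    """Run forwards, flip all momenta, run forwards again; return the distance from the start."""
    x1, p1 = verlet(x0, p0, dt, steps)                   # play the movie forwards
    x2, p2 = verlet(x1, [-v for v in p1], dt, steps)     # reverse every momentum and play again
    p2 = [-v for v in p2]                                # undo the flip to compare with p0
    dx = max(abs(a - b) for a, b in zip(x2, x0))
    dp = max(abs(a - b) for a, b in zip(p2, p0))
    return max(dx, dp)


if __name__ == "__main__":
    x0 = [0.1, -0.2, 0.3, 0.0]
    p0 = [0.0, 0.5, -0.1, 0.2]
    print("largest deviation after forward-and-back run:", reversal_error(x0, p0))
```

The spring chain is deliberately non-chaotic. In a chaotic system, such as a realistic gas, round-off errors grow exponentially, so a numerical reversal of a long run would fail in practice even though the equations themselves are reversible.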

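Hamiltonian evolution has a second key property, expressed by Liouville’s theorem: it preserves volumes in phase space, so the probability density ρ of microstates is simply carried along the flow, neither compressed nor diluted. This means that the Gibbs entropy, S = -k ∫ ρ log ρ dΓ (the integral running over phase space), is actually a constant of the motion: it neither increases nor decreases during Hamiltonian evolution.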

But what about the second law of thermodynamics? This tells us that the entropy of a system tends to increase. Our everyday experience tells us this too: we know that physical systems tend to evolve towards states of increased disorder. Heat never flows spontaneously from a cold body to a hot one. Pour milk into coffee and everything rapidly mixes. How can this directionality in thermodynamics be reconciled with the completely reversible character of microscopic physics?

The answer to this puzzle is surprisingly simple, as long as you use a sensible interpretation of entropy that arises from the idea that its probabilistic nature represents not randomness (whatever that means) but incompleteness of information. I’m talking, of course, about the Bayesian view of probability.

First you need to recognize that experimental measurements do not involve describing every individual atomic property (the “microstates” of the system), but large-scale average things like pressure and temperature (these are the “macrostates”). Appropriate macroscopic quantities are chosen by us as useful things to use because they allow us to describe the results of experiments and measurements in a robust and repeatable way. By definition, however, they involve a substantial coarse-graining of our description of the system.

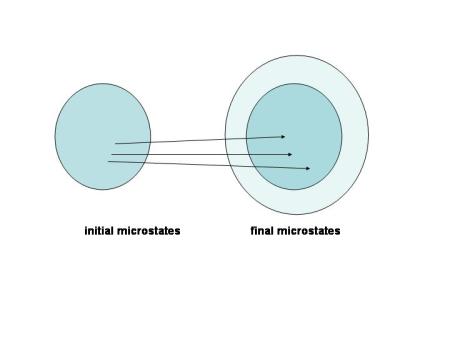

Suppose we perform an idealized experiment that starts from some initial macrostate. In general this will be consistent with a number – probably a very large number – of initial microstates. As the experiment continues the system evolves along a Hamiltonian path so that the initial microstate will evolve into a definite final microstate. This is perfectly symmetrical and reversible. But the point is that we can never have enough information to predict exactly where in the final phase space the system will end up because we haven’t specified all the details of which initial microstate we were in. Determinism does not in itself allow predictability; you need information too.

If we choose macro-variables so that our experiments are reproducible it is inevitable that the set of microstates consistent with the final macrostate will usually be larger than the set of microstates consistent with the initial macrostate, at least in any realistic system. Our lack of knowledge means that the probability distribution of the final state is smeared out over a larger phase space volume at the end than at the start. The entropy thus increases, not because of anything happening at the microscopic level but because our definition of macro-variables requires it.

This is illustrated in the Figure. Each individual microstate in the initial collection evolves into one state in the final collection: the narrow arrows represent Hamiltonian evolution.

However, given only a finite amount of information about the initial state, these trajectories can’t be as well defined as this. The set of final microstates therefore has to acquire a sort of “buffer zone” around the strictly Hamiltonian core; this is the only way to ensure that measurements on such systems will be reproducible.

The “theoretical” Gibbs entropy remains exactly constant during this kind of evolution, and it is precisely this property that requires the experimental entropy to increase. There is no microscopic explanation of the second law. It arises from our attempt to shoe-horn microscopic behaviour into the framework furnished by macroscopic experiments.
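The argument of the last few paragraphs can be played out on a toy “phase space”. The sketch below is an illustration under my own assumptions, not a model of any real gas: it uses Arnold’s cat map, (i, j) → (2i + j, i + j) mod M, on an M × M lattice of microstates. Because the map is a bijection, it is a discrete stand-in for Hamiltonian evolution: every microstate goes to exactly one other, so the fine-grained (Gibbs-like) entropy of a uniform distribution over the starting microstates never changes. An observer who can only see B × B blocks of cells (the “macrostates”) sees the coarse-grained entropy climb as the initial block is stretched and folded across the lattice. The lattice size M = 64, the block size B = 8 and the function names are arbitrary, hypothetical choices.

```python
import math
from collections import Counter

M = 64  # lattice size: M * M hypothetical microstates
B = 8   # coarse-graining block size: the observer sees only (M // B) ** 2 macrocells


def cat_map(cell):
    """One step of Arnold's cat map on the M x M lattice (a bijection, since det = 1)."""
    i, j = cell
    return ((2 * i + j) % M, (i + j) % M)


def shannon(weights):
    """Shannon entropy (in units of k) of a list of non-negative weights."""
    total = sum(weights)
    return -sum((w / total) * math.log(w / total) for w in weights if w > 0)


def entropies(cells):
    """Return (fine-grained, coarse-grained) entropy of a uniform spread over `cells`."""
    fine = shannon(list(Counter(cells).values()))  # counts are all 1 while the map is a bijection
    coarse = shannon(list(Counter((i // B, j // B) for i, j in cells).values()))
    return fine, coarse


def run(steps=8):
    """Start with every microstate in one macrocell and iterate the map."""
    cells = [(i, j) for i in range(B) for j in range(B)]
    history = [entropies(cells)]
    for _ in range(steps):
        cells = [cat_map(c) for c in cells]
        history.append(entropies(cells))
    return history


if __name__ == "__main__":
    for t, (fine, coarse) in enumerate(run()):
        print(f"step {t}: fine-grained {fine:.3f}, coarse-grained {coarse:.3f}")
```

On a finite lattice the map is just a permutation, so it is periodic: wait long enough and the coarse-grained entropy returns to zero. The increase is a statement about what happens over realistic times, not a theorem for all time.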

Another, perhaps even more compelling, demonstration of the so-called subjective nature of probability (and hence entropy) is furnished by Maxwell’s demon. This little imp first made its appearance in 1867 or thereabouts and subsequently led a very colourful and influential life. The idea is extremely simple: imagine we have a box divided into two partitions, A and B. The wall dividing the two sections contains a tiny door which can be opened and closed by a “demon” – a microscopic being “whose faculties are so sharpened that he can follow every molecule in its course”. The demon wishes to play havoc with the second law of thermodynamics so he looks out for particularly fast moving molecules in partition A and opens the door to allow them (and only them) to pass into partition B. He does the opposite thing with partition B, looking out for particularly sluggish molecules and opening the door to let them into partition A when they approach.

The net result of the demon’s work is that the fast-moving particles from A are preferentially moved into B and the slower particles from B are gradually moved into A. Consequently, the average kinetic energy of A molecules steadily decreases while that of B molecules increases. In effect, heat is transferred from a cold body to a hot body, something that is forbidden by the second law.
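Here is a toy version of the demon at work, a sketch under stated assumptions rather than a physical simulation. Molecules are represented only by their kinetic energies (unit mass and temperature, three velocity components drawn from a unit normal); there are no collisions or re-thermalisation; and whenever a fast molecule in A and a slow molecule in B happen to be at the door together the demon swaps them, so that both partitions keep the same number of molecules. “Fast” and “slow” are judged against the initial mean energy. The function names and parameters are made up for illustration.

```python
import random
from statistics import mean


def kinetic_energy(rng: random.Random) -> float:
    """Kinetic energy of one molecule: unit mass, each velocity component ~ N(0, 1)."""
    return 0.5 * sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(3))


def run_demon(n: int = 1000, attempts: int = 20000, seed: int = 1):
    """Let a hypothetical demon sort molecules; return (mean A, mean B, total before, total after)."""
    rng = random.Random(seed)
    a = [kinetic_energy(rng) for _ in range(n)]
    b = [kinetic_energy(rng) for _ in range(n)]
    threshold = mean(a + b)          # the demon's dividing line between "fast" and "slow"
    total_before = sum(a) + sum(b)
    for _ in range(attempts):
        i = rng.randrange(n)         # a molecule in A arriving at the door
        j = rng.randrange(n)         # a molecule in B arriving at the door
        if a[i] > threshold and b[j] < threshold:
            a[i], b[j] = b[j], a[i]  # fast one passes into B, slow one into A
    return mean(a), mean(b), total_before, sum(a) + sum(b)


if __name__ == "__main__":
    mean_a, mean_b, before, after = run_demon()
    print(f"mean energy in A: {mean_a:.3f}, in B: {mean_b:.3f}")
    print(f"total energy before: {before:.3f}, after: {after:.3f}")
```

Every swap moves energy from A to B while leaving the total untouched, so A cools and B warms. What the toy deliberately leaves out is any cost for the demon’s measurements.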

All this talk of demons probably makes this sound rather frivolous, but it is a serious paradox that puzzled many great minds until it was resolved in 1929 by Leo Szilard. He showed that the second law of thermodynamics would not actually be violated if the entropy of the entire system (i.e. box + demon) increased by a certain amount every time the demon measured the speed of a molecule to decide whether to let it pass from one side of the box to the other. This amount of entropy is precisely enough to balance the apparent decrease in entropy caused by the gradual migration of fast molecules from A into B. This illustrates very clearly that there is a real connection between the demon’s state of knowledge and the physical entropy of the system.
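For a sense of scale: Szilard’s analysis of a one-molecule engine puts the entropy that must be generated in acquiring one bit of information (is the molecule on the left or the right?) at no less than k ln 2. The snippet below just does that arithmetic. The 300 K temperature is an arbitrary illustrative choice and the helper names are hypothetical.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K (exact in the 2019 SI)
N_A = 6.02214076e23  # Avogadro constant, 1/mol (exact in the 2019 SI)


def entropy_per_bit() -> float:
    """Entropy k ln 2 (J/K) associated with one binary measurement."""
    return K_B * math.log(2)


def min_heat_per_bit(temperature_k: float) -> float:
    """Heat (J) needed to carry k ln 2 of entropy into a reservoir at temperature T."""
    return temperature_k * entropy_per_bit()


def molar_entropy_per_bit() -> float:
    """R ln 2 (J/K): the same cost for a mole's worth of one-bit measurements."""
    return N_A * entropy_per_bit()


if __name__ == "__main__":
    print(f"k ln 2          = {entropy_per_bit():.4e} J/K")
    print(f"kT ln 2 (300 K) = {min_heat_per_bit(300.0):.4e} J")
    print(f"R ln 2          = {molar_entropy_per_bit():.4f} J/K")
```

The cost per measurement is tiny, which is why the demon’s bookkeeping is so easy to overlook, but it scales with the number of molecules sorted.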

By now it should be clear why there is some sense of the word subjective that does apply to entropy. It is not subjective in the sense that anyone can choose entropy to mean whatever they like, but it is subjective in the sense that it has to do with the way we manage our knowledge about nature rather than with nature itself. I know from experience, however, that many physicists feel very uncomfortable about the idea that entropy might be subjective even in this sense.

On the other hand, I feel completely comfortable about the notion. I even think it’s obvious. To see why, consider the example I gave above about pouring milk into coffee. We are all used to the idea that the nice swirly pattern you get when you first pour the milk in is a state of relatively low entropy. The parts of the phase space of the coffee + milk system that contain such nice separations of black and white are few and far between. It’s much more likely that the system will end up as a “mixed” state. But then how well mixed the coffee is depends on your ability to resolve the size of the milk droplets. An observer with good eyesight would see less mixing than one with poor eyesight. And an observer who couldn’t perceive the difference between milk and coffee would see perfect mixing. In this case entropy, like beauty, is definitely in the eye of the beholder.
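The eyesight argument can be made quantitative with a deliberately crude model, sketched here under my own assumptions: a one-dimensional strip of cells, each either milk (1) or coffee (0), laid out as alternating droplets four cells wide. An observer who can only resolve blocks of r cells assigns each block the ideal mixing entropy -[f ln f + (1 - f) ln(1 - f)] of its milk fraction f. The droplet size, strip length and function names are hypothetical.

```python
import math
from typing import List


def droplet_pattern(n_cells: int = 1024, droplet: int = 4) -> List[int]:
    """Hypothetical 'cup': alternating milk (1) and coffee (0) droplets of fixed width."""
    return [1 if (k // droplet) % 2 == 0 else 0 for k in range(n_cells)]


def mixing_entropy_per_cell(pattern: List[int], resolution: int) -> float:
    """Coarse-grained entropy per cell (in units of k) for an observer who can only
    resolve blocks of `resolution` cells. Each block contributes the ideal mixing
    entropy -(f ln f + (1 - f) ln(1 - f)) of its milk fraction f, weighted by its size."""
    total = 0.0
    for start in range(0, len(pattern), resolution):
        block = pattern[start:start + resolution]
        f = sum(block) / len(block)
        h = -sum(q * math.log(q) for q in (f, 1.0 - f) if q > 0)
        total += len(block) * h
    return total / len(pattern)


if __name__ == "__main__":
    cup = droplet_pattern()
    for r in (1, 2, 3, 4, 8, 64):
        print(f"resolution {r:>2} cells: entropy per cell = {mixing_entropy_per_cell(cup, r):.3f}")
```

An observer whose resolution matches or is finer than the droplet grid (r = 1, 2 or 4) sees no mixing at all; one whose blocks are coarser than a pair of droplets sees the maximum, ln 2 per cell, for exactly the same cup of coffee.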

The refusal of many physicists to accept the subjective nature of entropy arises, as do so many misconceptions in physics, from the wrong view of probability.

April 12, 2009 at 11:18 pm

I’ve reached the conclusion that although this is clearly the correct explanation there is a missing link in the argument, since a probability is an epistemological object that you can *never* measure, but experimental entropy *is* something you can infer from measurements. Can any “objective Bayesian” physicist (not a large category, alas) fill in that missing link? Even the best book by far on the subject, W. Tom Grandy’s “Entropy and the time evolution of macroscopic systems” (Oxford 2008) skates over this.

Anton

April 12, 2009 at 11:22 pm

Hi Peter

Interesting post. So are you saying that it is the demon’s very existence that counteracts the apparent violation, or the actual work he does each time he ‘knows’ when to open/close the door? I have always been a little confused by this notion, but have accepted that since it is merely a thought experiment it doesn’t really violate laws.

Regards

Chris

April 13, 2009 at 3:09 am

Peter, there is a very interesting paper by Jaynes in 1965 in which he quotes Wigner as saying that “entropy is an anthropomorphic concept”. (This came up in the comment stream on my post about complexity.) Apparently what he was getting at was that you have a free choice in which are the parameters of interest describing the state of a system. The right choice depends on the experiment you wish to do. This sounds like almost the same thing as your point that entropy depends on your state of knowledge.

So I think you have made a strong case for entropy being subjective. But the problem remains that both the second law and the concept of probability already have an assumed arrow of time, so the Boltzmann argument to derive the former from the latter is circular. (I think Boltzmann knew this in his later papers.) So my bet would be on the cosmological arrow being the fundamental one.

April 13, 2009 at 5:30 am

There is another escape from the puzzle that the Gibbs entropy is a constant under Hamiltonian evolution — use the definition of entropy engraved on Boltzmann’s tombstone, S = k log W, where W is the volume of the region in phase space corresponding to the macrostate of the system. Then the entropy simply goes up with overwhelming probability, as long as you start in a low-entropy state.

Of course there is just as much subjectivity in the definition — someone has to decide what counts as a macrostate, which is defined as “the set of microstates that are macroscopically indistinguishable,” and we need to answer “indistinguishable to whom?” But the subjectivity enters once and for all in the choice of coarse-graining, and has nothing whatsoever to do with our knowledge of the system. (The whole setup works perfectly well even if we know the exact microstate and the exact Hamiltonian.)
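Sean’s alternative can be illustrated with the simplest possible coarse-graining: N distinguishable particles, each in the left or right half of a box, with the macrostate defined as the number n on the left. Then W = C(N, n) and S/k = ln W. The sketch below (function names hypothetical, 5% tolerance chosen arbitrarily) shows that the even split maximises S and that almost all microstates lie close to it once N is large. That counting is what lies behind the statement that entropy defined this way goes up with overwhelming probability from a low-entropy start, with no reference to what anyone knows about the microstate.

```python
import math


def boltzmann_entropy(n_total: int, n_left: int) -> float:
    """S/k = ln W, where W = C(N, n) counts the microstates (which particles sit on
    the left) compatible with the macrostate 'n particles on the left'."""
    return math.log(math.comb(n_total, n_left))


def fraction_near_equilibrium(n_total: int, tolerance: float = 0.05) -> float:
    """Fraction of all 2**N microstates whose macrostate lies within tolerance*N of an even split."""
    half_width = int(n_total * tolerance)
    centre = n_total // 2
    count = sum(math.comb(n_total, n) for n in range(centre - half_width, centre + half_width + 1))
    return count / 2 ** n_total


if __name__ == "__main__":
    for n_total in (10, 100, 1000):
        print(n_total, fraction_near_equilibrium(n_total))
```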

April 13, 2009 at 8:49 am

Andy,

Yes, I should have mentioned Ed Jaynes by name in the post. I think this view of entropy owes a lot to him, and also to Claude Shannon. One should really call it the Gibbs-Shannon-Jaynes entropy, rather than just the Gibbs entropy.

Peter

April 13, 2009 at 9:31 am

I’m going to ‘out’ Peter as a follower of ET (Ed) Jaynes (as I am). To Andxyl: You don’t have a free choice of what thermodynamic variables to look at if you require your experiments relating these variables to be reproducible. For example, the relation between pressure and density (for a perfect gas this is Boyle’s law) is reproducible only at constant temperature. The relation between pressure, density and temperature is more widely reproducible (but even that goes pear-shaped for a polar gas such as NO2 in a strong electric field). It is up to *you* to decide when you are happy that you have macroscopic reproducibility. Then you consider the thermodynamic variables involved as probability (‘ensemble’) averages over microstates. Peter made this explicit above but the importance of thermodynamic reproducibility is still under-appreciated.

To Sean Carroll: the famous relation S = k log W is an asymptotic approximation to the mathematics of Jaynes’ ‘maximum entropy formalism’ in the limit of a large number of degrees of freedom (10^23 should certainly do). It’s all in that formalism, and Tom Grandy’s book (see my post above) is the best guide to it at an advanced level.

Let me repeat the bit of the argument that I still think is missing. In the maximum entropy formalism we treat thermodynamic variables as expectation values of quantities defined over the microstate (microstate: defined by 10^23 variables) with respect to the probability distribution of microstates. But an expectation value is still the same category of thing as a probability. (To make the point, the probability p_1 of the first face of a dice showing is the expectation of Kronecker-delta(i,1) where i denotes the face number.) And we Bayesians understand that probability is how strongly one binary proposition is implied to be true upon supposing that another proposition is true. That is not an observable quantity.

Jaynes wrote about how macroscopic reproducibility circumvented the need for ergodic theorems (which aim to prove that time averages and probabilistic ‘ensemble’ averages are in general equal). I agree, but I think some steps in his reasoning need filling out.

Anton
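Anton’s distinction between an expectation value, computed from a probability assignment, and a frequency, counted from data, can be seen in a few lines. The sketch below uses a hypothetical loaded die with made-up face probabilities: it computes p_1 as the expectation of Kronecker-delta(i,1), then counts how often face 1 turns up in simulated rolls. The two numbers agree closely for many rolls, as the law of large numbers says they should, but one is an input and the other a measurement; the code illustrates the distinction rather than closing the gap Anton describes. All names are hypothetical.

```python
import random
from typing import Callable, List


def kronecker(i: int, j: int) -> int:
    """Kronecker delta: 1 if i == j, else 0."""
    return 1 if i == j else 0


def expectation(f: Callable[[int], float], probs: List[float]) -> float:
    """E[f(i)] = sum over faces i = 1..len(probs) of p_i * f(i)."""
    return sum(p * f(i) for i, p in enumerate(probs, start=1))


def sample_frequency(face: int, probs: List[float], n_rolls: int, seed: int = 0) -> float:
    """Relative frequency of `face` in simulated rolls (a count from 'data')."""
    rng = random.Random(seed)
    rolls = rng.choices(range(1, len(probs) + 1), weights=probs, k=n_rolls)
    return sum(kronecker(r, face) for r in rolls) / n_rolls


if __name__ == "__main__":
    probs = [0.3, 0.1, 0.1, 0.1, 0.2, 0.2]  # made-up loaded die
    print("p_1 as an expectation:", expectation(lambda i: kronecker(i, 1), probs))
    print("frequency of face 1:  ", sample_frequency(1, probs, 100_000))
```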

April 13, 2009 at 10:10 am

That reminds me that I should sometime blog about ergodicity – a word that is constantly abused by cosmologists.

April 13, 2009 at 3:24 pm

Anton– sure, I completely agree. You can derive Boltzmann’s formula from the Gibbs/Shannon/Jaynes approach, and you wouldn’t need anywhere near 10^23 particles!

I was just trying to make a tiny conceptual point: you don’t have to define entropy in terms of our knowledge of the state of the system. You can if you want to, and that’s often the most convenient approach for actually calculating things, and Jaynes and his predecessors handed us a bunch of useful mathematical formalism. But you can also define entropy when you know the exact microstate, and keep knowing the exact microstate (have perfect information, in other words), by choosing a coarse-graining and counting how many other states look macroscopically the same.

The choice of where to locate the inevitable subjectivity is subjective, is all I’m saying. (Not a very deep point.)

April 13, 2009 at 4:35 pm

Sean: we have essentially converged. I prefer to avoid the word ‘subjective’ because I find it means different things to different people in this area of thought. (Ditto ‘random’ and even ‘probability’!)

Anton

April 13, 2009 at 5:18 pm

When a scientist says “the explanation is surprisingly simple” you know that you are in for a lot of trouble.

April 13, 2009 at 5:20 pm

I have to admit that I never understood any of this stuff at all when I was at University and it certainly didn’t help trying to read Gibbs’ own words on the subject which I found terribly confusing. In fact, it only started to make sense to me when I read a polemical article by Steve Gull (probably given to me by Anton). Jaynes’ papers on this which I subsequently found are wonderfully lucid and deeply thought out, although I agree with Anton that he didn’t quite succeed in his goal of ridding the subject of ensemble-speak. It doesn’t surprise me that there are still gaps in the logic since it is a subject that lies at the junction of so many different ways of thinking about the world.

April 13, 2009 at 5:47 pm

I agree with Anton that the word “subjective” is an unhelpful trigger word. To layfolk it means “can mean whatever you like” and even to physicists it is usually taken to imply “ill defined”. Neither of those applies to entropy. What we are after is “observer dependent” or something like that, or perhaps even better “experimenter dependent”.

April 13, 2009 at 6:42 pm

The missing bit of the argument has to be something to do with the frequency distribution – which *is* in principle observable – being numerically equal (though not conceptually identical) to the probability distribution, so far as macroscopic averaging goes.

Anton

April 14, 2009 at 12:05 am

So how does this change when we include quantum mechanics? The system is no longer fully deterministic and the micro states cannot ever be fully known. Does entropy become less subjective?

April 14, 2009 at 7:35 am

When the analysis is done quantum-mechanically you lose the conceptual clarity of Peter’s diagram above, but the implementation is not very different. Instead of taking integrals over 10^23-dimensional phase space of the product of the density function with (for example) the kinetic energy, you take the trace of the product of the density matrix with the kinetic energy operator. The mathematics is heavier because things don’t always commute and you have an extra parameter (Planck’s constant). Tom Grandy’s book is quantum-mechanical from the start, which is one reason I call it ‘advanced’.

Anton
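Anton’s recipe, a trace of the density matrix times an operator in place of a phase-space integral, can be tried out on the smallest possible case: a single spin-1/2 (two-level) system. The sketch below, in plain Python with hand-rolled 2 × 2 helpers (all names hypothetical), computes ⟨A⟩ = Tr(ρA) and the von Neumann entropy S/k = -Tr(ρ ln ρ). It also shows how a density matrix folds in ordinary ignorance about which state was prepared: a 50/50 mixture of spin-up and spin-down has the same ⟨σ_z⟩ as a pure “spin along x” state, but entropy ln 2 instead of zero.

```python
import math


def matmul(x, y):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def expectation(rho, op):
    """<A> = Tr(rho A), the quantum counterpart of integrating density times observable."""
    prod = matmul(rho, op)
    return (prod[0][0] + prod[1][1]).real


def von_neumann_entropy(rho):
    """S/k = -Tr(rho ln rho), using the closed-form eigenvalues of a 2x2 Hermitian rho."""
    a, d = rho[0][0].real, rho[1][1].real
    half_gap = math.sqrt(((a - d) / 2) ** 2 + abs(rho[0][1]) ** 2)
    eigs = [(a + d) / 2 + half_gap, (a + d) / 2 - half_gap]
    return -sum(lam * math.log(lam) for lam in eigs if lam > 1e-15)


def projector(psi):
    """|psi><psi| for a normalised two-component state."""
    return [[psi[i] * psi[j].conjugate() for j in range(2)] for i in range(2)]


def mixture(weights, states):
    """Density matrix for 'state k prepared with probability w_k'."""
    rho = [[0j, 0j], [0j, 0j]]
    for w, psi in zip(weights, states):
        p = projector(psi)
        for i in range(2):
            for j in range(2):
                rho[i][j] += w * p[i][j]
    return rho


if __name__ == "__main__":
    s2 = 1 / math.sqrt(2)
    up, down, plus = [1 + 0j, 0j], [0j, 1 + 0j], [s2 + 0j, s2 + 0j]
    sigma_z = [[1, 0], [0, -1]]
    for name, rho in (("pure +x", mixture([1.0], [plus])),
                      ("50/50 up/down", mixture([0.5, 0.5], [up, down]))):
        print(name, expectation(rho, sigma_z), von_neumann_entropy(rho))
```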

April 14, 2009 at 6:38 pm

Yes but what I was getting at is the way entropy in a classical universe isn’t about the universe out there but is about our knowledge of it. Randomness is epistemological. That’s what makes it subjective in a sense.

But in QM randomness is irreducible. It isn’t just that we don’t know something about the system. In a deep sense it’s like the information does not exist anywhere. Randomness becomes ontological.

Classically cats are objectively either alive or dead and subjectively we may or may not know which. In QM the cat may not objectively be either alive or dead until we look.

April 14, 2009 at 10:12 pm

Dear ppnl

In the maximum entropy formalism you just average/marginalise over all uncertainty, whether quantum-mechanical or a result of not knowing the quantum state with certainty. The density matrix handles both uncertainties automatically.

But why do you assume that QM uncertainties are ontological? Don’t believe everything you have heard about “no hidden variable” theorems. All you can do is show that certain *categories* of hidden variable are inconsistent with the experimentally verified predictions of QM. For instance, Bell’s theorem shows that any underlying HVs must be nonlocal (no big deal – we have had “action at a distance” since Newton) and acausal (which deserves to be explored more). I don’t even like the name “hidden variables” because we can SEE their influence, when two systems with identical wavefunctions behave differently. In the 19th century physicists never said that maybe the jiggling of pollen grains seen under a microscope was “intrinsically random” – they supposed there was a reason for such “Brownian motion”, and the richness of atomic theory was the result. Denial of hidden variables is a dead end.

Please don’t diss Peter’s very classical and rather unwell cat!

Cheers, Anton

April 15, 2009 at 2:01 am

I do not assume QM uncertainties are ontological. It is just the most common and simplest view.

I think they are called hidden variables because they cannot be measured and so cannot be used to make predictions. And nonlocal goes far beyond action at a distance. It is faster than light action and action back in time. You have to “hide” the variables in order to protect us from their effects.

But if the variables are hidden what makes you think they are there? In any case the randomness becomes irreducible even in principle and that irreducibility is what makes the randomness ontological.

April 15, 2009 at 7:25 am

There’s a difference between something being “ontological” and not being able to handle it with the current theoretical understanding. It is true that quantum mechanics has an irreducible unpredictability in it (although as Anton rightly states, this doesn’t make much difference to the argument I gave above). But that doesn’t mean that there can’t be a more complete theory. Quantum mechanics isn’t nature. It’s a successful way of describing some aspects of nature, but at some level it’s probably wrong.

April 15, 2009 at 11:50 am

I agree that QM will probably be wrong or incomplete at some level. That may be true of any theory ever put forward. But I think there is a danger here of trying to alter QM in a way to restore some kind of classical view of the world. I think that is misguided.

Hidden variables are a perfect example. Sure you can construct a theory with hidden variables. But you have to let them do magical things like time travel and then you have to hide them away to protect our world from the consequences of that magic. That’s just ugly. It is just an attempt to return to some kind of classical thinking.

I think we need a post on the quantum measurement problem.

April 15, 2009 at 12:36 pm

I don’t really agree. I think just declaring things to be “random” is a cop-out. Worse, it is anti-scientific. I keep an open mind about whether there might be a better (i.e. more complete) description at some level. If we assert that this doesn’t exist then we can be sure we’ll never find it.

It’s a matter of taste what you consider to be “ugly”. I think, however, that accepting the likelihood that the theory is incomplete is a more scientific attitude than asserting that quantum mechanics provides as complete a description as is possible in principle.

Also it doesn’t seem to me to be unreasonable to suppose that the causal structure of space-time vanishes in a quantum gravity (if there ever is one) in which case non-local correlations are not just possible but perhaps even mandatory.

April 15, 2009 at 6:46 pm

Hidden variables aren’t so hidden; you can see their effect when systems with identical wavefunctions behave differently. What we can’t do today is *control* them.

I agree with Peter; the job of theoretical physicists is to improve testable prediction. Saying you can’t do so is a dereliction of duty. If people had taken that line when Brownian motion was observed then we would still be stuck with pre-atomic 19th century physics. I agree that they have weird properties to be consistent with the successful predictions of QM. So be it!

Anton

April 16, 2009 at 12:50 am

I’m not just declaring anything random. I’m simply pointing out that this is the simplest position. There is no reason in evidence or logic to go beyond this. I have as open a mind about a more complete theory as I do about anything with no evidence or reason to exist. Find the evidence and we will talk.

Hidden variables are very well hidden. They cannot be observed in order to make predictions. They cannot be used to transport information. If you assume they exist you have to assume they can communicate faster than light yet cannot be used to actually send signals.

No we cannot control them but the point is if we could we would be able to send messages back in time or faster than light. If we could see and control hidden variables we would be masters of time and space. That’s why I doubt they exist. Well maybe time travel is possible. Maybe faster than light communication is possible. Get back to me when you have the evidence.

I agree nonlocal correlations are mandatory. But nonlocal causation is not. Hidden variables is an attempt to construct a nonlocal cause for the correlation.

April 16, 2009 at 7:40 am

To ppnl: Why do *you* think that two systems having identical wavefunctions behave differently?

Anton

April 16, 2009 at 12:40 pm

“To ppnl: Why do *you* think that two systems having identical wavefunctions behave differently?”

If that is the way it is then that is just the way it is. Science only answers what questions not why questions. Why is the speed of light the same for all observers? It just is. The laws of physics could have been different. We looked and saw that they weren’t.

If you locate an asteroid within some error bounds you can construct a probability function that describes what you know about the location of that asteroid. As the asteroid progresses in its orbit the probability function evolves and changes over time. But that probability function isn’t a real thing. It is only a description of our knowledge. It is a mathematical ghost. The asteroid is assumed to have a real if unknown location in that probability function.

If you locate a subatomic particle in some error range you can construct a probability function for it as well. This function will also evolve with time. The construction is different and it evolves differently but it is still a probability function. The problem is that the particle cannot be assumed to have a well defined position before you look. In fact you can see the probability function as the real entity and the particle as the illusion with no existence apart from the interaction in which they were detected. Asking where they were before you looked is as nonsensical as asking which twin is “really” older in the relativity twin paradox. It is a formally meaningless question.

Quantum field theory is more explicit in that it treats particles as excitations of a field. They do not exist except where you interact with the field to detect the particle.

Quantum computers attempt to deal with those probability functions directly. The only way to do that is to maximize your ignorance of the actual state of the computer. This gives the computer a vast increase in computational power. In effect your ignorance has an objective and powerful effect on the computer. Again the probability function appears to be a real thing with real objective effects.

April 16, 2009 at 1:07 pm

I asked ppnl: “Why do *you* think that two systems having identical wavefunctions behave differently?” and was given the reply “If that is the way it is then that is just the way it is.”

Indeed. IF that is the way it is. But how do you know that that is the way it is if you decline to consider the question in the way scientists are supposed to: interplay between theory and experiment?

Anton

April 16, 2009 at 2:02 pm

I don’t know that that is the way it is. I don’t know that there isn’t a way to restore absolute time and space despite relativity. Science doesn’t deal in proofs. It is always contingent.

And I don’t know what you mean “decline to consider the question in the way scientists are supposed to: interplay between theory and experiment”

I’m perfectly willing to look at the results of any new experiment and consider any new theory. But at the same time I cannot ignore past work. Past work makes hidden variables problematic just as past work makes restoring absolute time and space problematic.

I have no philosophical problem with hidden variables. I’m just saying show me. And I am saying I have no philosophical problem with ontological randomness. Do you? If so you need to face the fact that the universe isn’t obliged to be bound by your philosophy.

The question is what do things look like now. I’m saying the randomness looks ontological and I have no problem with that.

April 16, 2009 at 6:41 pm

Don’t you want to improve the accuracy of testable prediction ppnl? Isn’t improvement in testable prediction the thing that makes progress in science different from how fashions in clothing change? You won’t and can’t achieve that without considering the possibility of HVs. No need to use long words that philosophers like!

Anton

April 16, 2009 at 7:43 pm

Don’t you want to improve the accuracy of testable prediction Anton? Isn’t improvement in testable prediction the thing that makes progress in science different from how fashions in clothing change? You won’t and can’t achieve that without considering the possibility of absolute time and space.

You can play that game with any theory.

I have considered hidden variables. I consider them problematic for the many reasons I have pointed out. You have so far failed to address any of those reasons.

You keep talking as if I’m the one with a philosophical agenda that makes me unable to consider an alternative. I think you have that backwards. I think your classical monkey brain is trying to shoehorn nonclassical phenomena into a classical pigeonhole.

And I still think someone needs to think about the quantum measurement problem.

April 16, 2009 at 8:07 pm

Now ppnl, let’s keep it civilized.

I think this particular discussion has run its course anyway.

April 16, 2009 at 8:13 pm

Stuff philosophy, I just want to know whether the next electron in my Stern-Gerlach apparatus will go up or down. Don’t you?

Anton

PS Agree that measurement in QM deserves closer scrutiny, but that’s another issue…

April 16, 2009 at 9:46 pm

Sorry if anything seemed uncivil. I didn’t intend it that way. Monkey brain is simply a reference to the fact that our brains evolved to keep a primate alive in an approximately classical world. It is attached to classical reasoning.

I would love to be able to tell you where your electron is going. That does not mean it is possible.

In a deep sense I don’t believe that the measurement problem is a different issue. After all that is where the probability function disappears.

April 16, 2009 at 9:59 pm

Thing is, the electron in my Stern-Gerlach apparatus knows nothing of classical or quantum reasoning. I can even phrase the whole thing entirely in apparatus terms, as “will the light on my upper or my lower measuring apparatus light up?” If you told a Greek philosopher that such a question was out of order, what might he reply?

Somewhere else on Peter’s blog I’ve suggested why 19th century scientists supposed there was a reason for Brownian motion but 20th century ones largely supposed there wasn’t a reason for quantum fluctuations. John Bell considered the question but thought it was something in Germanic culture (QM was largely a German invention). I think it is to do with the weakening of the Judaeo-Christian worldview which asserts that the world is comprehensible to man (a view not shared by other cultures).

Anton

April 17, 2009 at 6:01 pm

You keep focusing exclusively on the randomness of QM. For what it’s worth I would agree that doubting that there is an underlying mechanism explaining Brownian motion is somewhat pigheaded. (BTW do you have a reference?)

But QM is different in that the underlying mechanism would have to operate faster than light and travel in time. Then you have to take this unobserved process and actively hide it so that time travel or faster than light communication is never observed. This is a powerful reason to doubt any such mechanism.

This isn’t an impossible thing. After all modern theories treat space and time as an a priori background. Some future theory will have to explain how these things arise from physics. It may be possible to find your mechanism at that level. But I think it far far more likely that whatever theory we find applicable there will cause even more philosophical disquiet than mere randomness.

Science, if it doesn’t scare you then you aren’t doing it right.

April 17, 2009 at 6:41 pm

Well there was Ostwald vs Boltzmann re atomic theory. Acausality appears to be the only explanation for some of the “delayed choice” type of experiments we see in QM; it appears in more than Bell-type expts. There is no need for superluminality if you accept acausality and it appears that we have to. There is, however, a difference between asserting that there is no HV theory, and asserting that there is such a theory but we don’t have enough info to use it to predict. It might be that we can use it to predict better than QM in circumstances that don’t correspond to closed timelike loop paradoxes. That would be a real advance. I’m not saying “seek and you shall find” but I am saying “don’t seek and you definitely won’t find”.

Anton

April 18, 2009 at 7:21 pm

I’m not saying “don’t seek” either. But what I am saying is that sometimes you have to shut up and listen to the universe. That voice is easily drowned out by the noise of our philosophical expectations.

And I think answers will pop up even when they are not specifically sought. So I don’t think there is any special need to seek a way to reduce quantum randomness even if it exists. The universe speaks softly but constantly and consistently. The hard part is wrapping our monkey brains around what it has to say.

April 19, 2009 at 7:36 am

Dear ppnl: I agree that we need to be sensitive in a deep sense, but how we interpret data that come to us spontaneously, and what further experiments we choose to do so as to get further data, are governed by our (philosophical) expectations. As a theoretical physicist I want to improve the testable predictions we make about the universe. Specifically, I want to know whether my upper or my lower apparatus will go PING the next time I turn on my Stern-Gerlach apparatus. What you are asserting is equivalent to saying that I may not ask that question. I believe it is the job of theoretical physicists to ask such questions. I acknowledge your freedom to believe that there is no answer and to work instead on other areas of human endeavour.

Anton

April 20, 2009 at 11:01 am

Concerning Maxwell’s demon. As soon as the demon allows one “fast” particle through his door from A to B (and equilibrium is re-established in A and B) a pressure differential is created. To allow the next particle through, work must be done to open the door. As more and more particles are allowed through, more work is needed to open the door each time.

Thus it is essential to input work to produce a decrease in entropy, as indicated earlier by Chris.

Why couldn’t Maxwell see this simple explanation, or was he a joker ?

April 20, 2009 at 12:04 pm

It’s a microscopic door and Pressure is a macroscopic phenomenon so it does not manifest itself on this scale. There is empty space between the atoms.

April 20, 2009 at 6:29 pm

telescoper: “It’s a microscopic door and Pressure is a macroscopic phenomenon so it does not manifest itself on this scale. There is empty space between the atoms”.

This I appreciate. However, I’d like to know the size of the microscopic door you envisage? A door which is so small that it is only approached by a suitable particle once/sec is not much use! I’m impatient, I need to be able to see a measurable effect within an hour.

I suppose, to answer my own question, you could use an army of demons (10^20?), but that’s cheating(?).

I need to contemplate a bit more!
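How often a molecule reaches the door can be estimated from elementary kinetic theory: the number of molecules striking unit area per unit time is n v̄/4, with n the number density and v̄ = √(8kT/πm) the mean speed. The sketch below assumes nitrogen at 0 °C and one atmosphere and a square door 1 nm on a side; all of these numbers, and the function names, are illustrative choices, not anything specified in the comments.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K
AMU = 1.66053906660e-27  # atomic mass unit, kg


def number_density(pressure_pa: float, temperature_k: float) -> float:
    """Ideal-gas number density n = P / (kT), in molecules per cubic metre."""
    return pressure_pa / (K_B * temperature_k)


def mean_speed(temperature_k: float, mass_kg: float) -> float:
    """Mean molecular speed of a Maxwell-Boltzmann gas, sqrt(8kT / (pi m))."""
    return math.sqrt(8 * K_B * temperature_k / (math.pi * mass_kg))


def wall_flux(n: float, v_mean: float) -> float:
    """Molecules striking unit area per unit time, n * v_mean / 4."""
    return n * v_mean / 4


if __name__ == "__main__":
    flux = wall_flux(number_density(101325.0, 273.15), mean_speed(273.15, 28.0134 * AMU))
    door_area = (1e-9) ** 2  # hypothetical 1 nm x 1 nm door
    print(f"flux: {flux:.3e} per m^2 per s; arrivals at the door: {flux * door_area:.3e} per s")
```

On these assumptions even a nanometre-sized door is approached billions of times a second, so at ordinary gas densities the demon would not be short of customers.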
